Tehama's virtual desktop solution was rarely chosen by the end users who would be asked to use it on a daily basis. The decision to adopt it was typically made by departments looking to minimize risk when their staff remotely accessed sensitive company data.

Several of our customers struggled with end-user adoption because accessing a virtual desktop to deliver work was a new concept for their staff. Adoption wasn't mandated in every case, which made the situation even more challenging.

These insights began to surface through regular touchpoints as part of our continuous discovery model.

End User Adoption

“I don’t like watching videos if they’re more than five minutes. I’d rather read it.”

- Participant 3

“Maybe an hour of doing it on my own…can’t figure it out…look at the docs…”

- Participant 2

Methods

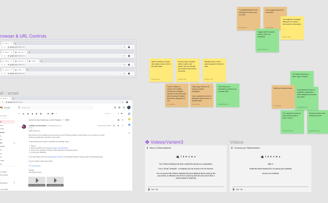

Customer interviews served as the starting point for learning how managers handled user onboarding and what challenges they faced in that process. Based on what we learned, we created a story map to capture the full onboarding process. We then built a prototype for usability testing so we could evaluate some new ideas that came out of our research.

Usability tests included an interview portion, paraphrasing tests and task-based tests.

Insights

To put it mildly, the usability tests opened our eyes to the complex experience of being introduced to a new tool.

In addition to identifying issues with the onboarding flow itself, we also captured important insights around adoption. Our test participants described feeling skepticism, impatience, and even a general lack of trust in management when asked to learn a new tool. The feedback made it clear that any friction in learning the new tool would jeopardize its adoption. One participant said it plainly: “Well, if it’s a case where it’s tough to learn, there’s a danger that it just won’t be used.”

Several participants also mentioned that when they’re onboarding with a new company, they’re typically learning many new tools at once, which leads to cognitive overload. Participants repeatedly stressed the importance of understanding ‘why’ they were being asked to learn this new tool. The lack of context only added to their impatience.

Ideation

As a team, we reviewed our notes and discussed opportunities along the user journey to improve the experience and address the concerns raised by the participants. Even the expressions and terms that participants used came up as opportunities to improve the product by reflecting their mental model, not our own!

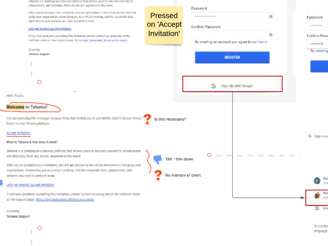

We decided to provide greater context in the welcome email and to adjust the wording of the first notification in the tool for the same purpose. These were identified as 'quick wins', and within a sprint of development we were measuring the impact.

Measurement

We established a combination of qualitative and quantitative data points that would reflect the impact of our changes, including the number of support tickets related to onboarding issues, user flow studies, and behavioral analytics.

UX Outcomes

Our initial iteration included minor documentation adjustments and provided only marginal improvements to the experience, as was expected. Follow-up efforts would target more impactful solutions as proposed during the ideation phase.